- Spark MongoDB Python example: how to
- Spark MongoDB Python example: install
- Spark MongoDB Python example: update

Any documents from `df_from_files` that don’t exist in `df_from_collection` have been filtered out.
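A minimal sketch of that filtering step in PySpark follows. It assumes both DataFrames carry MongoDB's `_id` field as a column. A left semi join keeps only the rows of `df_from_files` whose `_id` also appears in `df_from_collection`, and it returns only the columns of `df_from_files`. The function name `keep_existing_documents` is hypothetical.

```python
def keep_existing_documents(df_from_files, df_from_collection, key="_id"):
    """Drop rows of df_from_files whose key is absent from df_from_collection.

    Both arguments are assumed to be pyspark.sql.DataFrame objects that share
    the column named by `key` (MongoDB's `_id` by default).
    """
    # Only the key column is needed from the collection side.
    existing_keys = df_from_collection.select(key)
    # A left semi join keeps each matching row of the left side once and adds
    # no columns from the right side, so the result keeps df_from_files's schema.
    return df_from_files.join(existing_keys, on=key, how="left_semi")
```

Writing the filtered DataFrame back through the connector then only touches documents whose `_id` is already in the collection, assuming none are deleted between the read and the write. A semi join expresses "exists in" directly: it never duplicates left-side rows and never pulls in right-side columns.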

Spark MongoDB Python example: update

Spark MongoDB Python example: how to

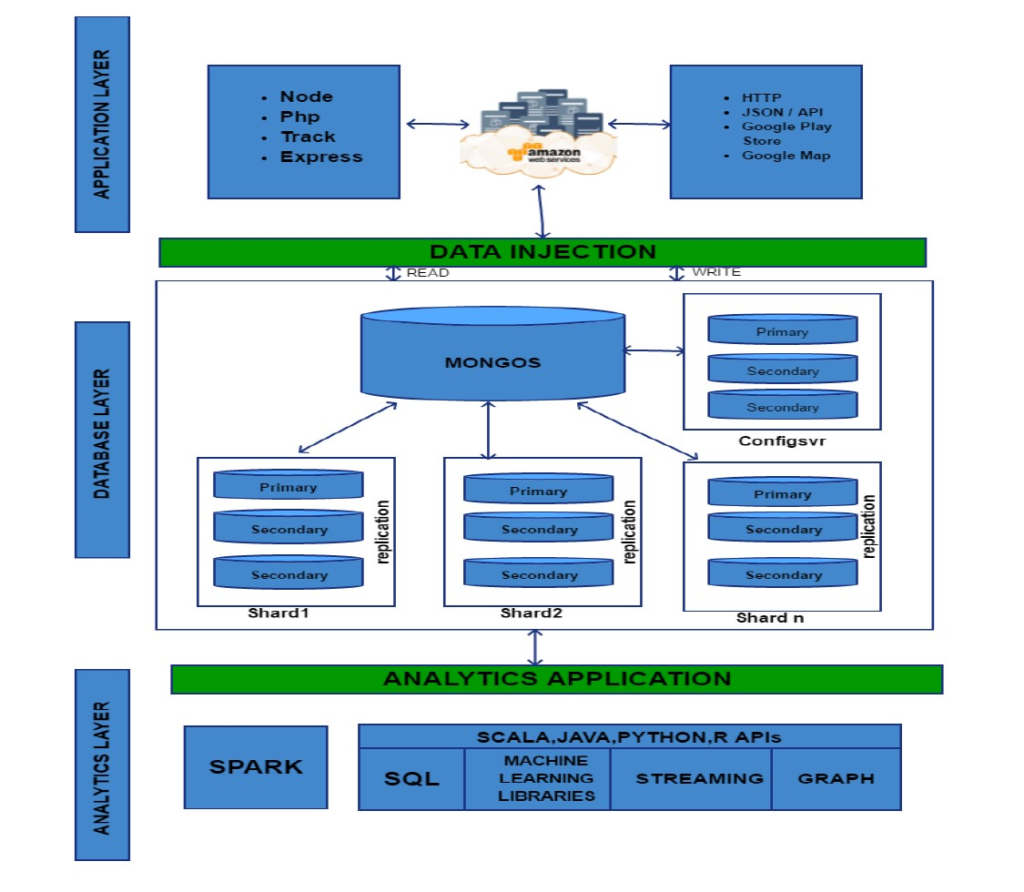

MongoDB and Apache Spark are two popular Big Data technologies. In my previous post, I listed the capabilities of the MongoDB connector for Spark. In this tutorial, I will show you how to configure Spark to connect to MongoDB, load data, and write queries.

MongoDB and Apache Spark - Getting started tutorial

Here we create a DataFrame to save in a MongoDB collection. For that, we import the `Row` class, which lives in the `pyspark.sql` submodule. We then read the stored collection back as a DataFrame, view its top 5 rows, and print its schema.
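The steps above can be sketched as two small helpers. This is a hedged example, not the original tutorial's code: it assumes the 3.0.x connector (data source short name `"mongo"`) and a session whose input and output URIs already point at the target collection. The helper names `save_rows` and `inspect_collection` are hypothetical.

```python
def save_rows(spark, rows, mode="append"):
    """Create a DataFrame from pyspark.sql.Row objects and write it to MongoDB."""
    df = spark.createDataFrame(rows)
    # "mongo" is the short data source name of the 3.0.x connector; the target
    # collection comes from spark.mongodb.output.uri unless overridden.
    df.write.format("mongo").mode(mode).save()
    return df


def inspect_collection(spark, n=5):
    """Read the collection back, show its first n rows and print its schema."""
    # The source collection comes from spark.mongodb.input.uri.
    df = spark.read.format("mongo").load()
    df.show(n)        # top n rows (5 by default)
    df.printSchema()  # schema the connector inferred from sampled documents
    return df
```

For example, after `from pyspark.sql import Row`, calling `save_rows(spark, [Row(name="Alice", age=34), Row(name="Bob", age=29)])` and then `inspect_collection(spark)` would write two placeholder documents and print the first rows and the schema. Because MongoDB documents have no fixed schema, the printed schema is what the connector infers from a sample of documents.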

Here's how to start pyspark from the command line. The locally installed version of Spark is 2.3.1; if you use a different version, modify the connector version number and the Scala version number accordingly:

`pyspark --packages :mongo-spark-connector_2.11:2.3.`

The Python approach requires submitting your code with pyspark or spark-submit. In a standalone Python application, you need to …

The code in this tutorial can be run in a Jupyter notebook or any Python console. In this scenario, we import the pyspark and pyspark.sql modules and create a Spark session. Note that you need to specify the MongoDB Spark connector build that suits your Spark version, for example `config('', ':mongo-spark-connector_2.12:3.0.1')` on the session builder. If you specified the connector's URI configuration options when you started pyspark, the default SparkSession object uses them. The URI specifies the MongoDB server address (127.0.0.1) and the database to connect to.

I process a bunch of log files, generate the output RDDs, and write them to my MongoDB collection through the mongo-spark connector. I want to set the 'upsert' option to False: I don't want to insert a new document when the document does not already exist.
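One way to wire up the session in a standalone script is sketched below. It assumes Spark 3.x built with Scala 2.12 and the connector's published Maven coordinate `org.mongodb.spark:mongo-spark-connector_2.12:3.0.1`, and it uses the 2.x/3.x connector settings `spark.mongodb.input.uri` and `spark.mongodb.output.uri`. The host, the database `test`, the collection `people`, and the helper names are hypothetical placeholders.

```python
# Hypothetical defaults: a local mongod, database "test", collection "people".
MONGO_CONNECTOR = "org.mongodb.spark:mongo-spark-connector_2.12:3.0.1"


def mongo_conf(host="127.0.0.1", port=27017, database="test",
               collection="people", package=MONGO_CONNECTOR):
    """Return the Spark settings the 2.x/3.x connector reads at startup."""
    # The connector accepts "<database>.<collection>" in the URI path.
    uri = f"mongodb://{host}:{port}/{database}.{collection}"
    return {
        "spark.jars.packages": package,   # resolved from Maven when the JVM starts
        "spark.mongodb.input.uri": uri,   # default source for spark.read
        "spark.mongodb.output.uri": uri,  # default target for DataFrame.write
    }


def build_session(app_name="mongo-example", **conf_kwargs):
    """Create (or reuse) a SparkSession configured for MongoDB."""
    # Imported lazily so mongo_conf() stays usable without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in mongo_conf(**conf_kwargs).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

Call `spark = build_session()` from a fresh Python process. `spark.jars.packages` only takes effect when the session's JVM is first launched, so inside an already running `pyspark` shell pass the connector with `--packages` (and the URIs with `--conf`) on the command line instead. For Spark 2.x, swap in the matching `_2.11` connector build.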

Spark MongoDB Python example: install
